This post isn't a comprehensive blow-by-blow of GPT-5.2; I'm just looking at how it performs in a typical business use case compared to some alternative models we're using today in various projects.

Other Resources

For a more complete picture of what's new in GPT-5.2 and what it means, I suggest checking out these two sources:

- Matthew Berman posted a first-take YouTube video on how 5.2 advances OpenAI's features and capabilities. It is a deep dive into stats and examples of advanced prompting features.

- Nate Jones is my go-to on what it means when these new advances enter the market. Check out this newsletter edition. He also has a YouTube channel that's worth checking out.

My Quick Tire-Kicking Test

In this post I'm sharing some "tire kicking" I did with GPT-5.2, comparing it apples-to-apples against other models in one of the application domains I've done a lot of work in: contract analysis using LLMs.

Test Setup

The test framework is a codebase available here in my GitHub repo. The test is a simple command-line Python app that uses OpenAI's Structured Model Outputs API to extract various terms and content from business contracts.
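For readers who have not used Structured Outputs, here is a minimal sketch of the general pattern, not the code from the repo. It assumes the `openai` Python package and an `OPENAI_API_KEY` in the environment; the helper names `build_schema` and `extract_fields`, the schema name, and the system prompt are all hypothetical. The schema is spelled out by hand here, whereas the repo describes its fields with Pydantic.

```python
import json
from typing import Dict


def build_schema(fields: Dict[str, str]) -> dict:
    """Build a strict JSON Schema with one string property per field.

    `fields` maps a field name to the description the model should follow.
    """
    return {
        "type": "object",
        "properties": {
            name: {"type": "string", "description": description}
            for name, description in fields.items()
        },
        # Strict mode expects every property to be listed as required
        # and additional properties to be disallowed.
        "required": list(fields),
        "additionalProperties": False,
    }


def extract_fields(contract_text: str, model: str, fields: Dict[str, str]) -> dict:
    """Ask the model for the given fields and return them as a dict."""
    # Imported lazily so build_schema can be used without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    completion = client.chat.completions.create(
        model=model,
        messages=[
            # Hypothetical system prompt, for illustration only.
            {"role": "system", "content": "Extract the requested fields from the contract."},
            {"role": "user", "content": contract_text},
        ],
        response_format={
            "type": "json_schema",
            "json_schema": {
                "name": "contract_extraction",  # hypothetical schema name
                "strict": True,
                "schema": build_schema(fields),
            },
        },
    )
    # The message content is a JSON string matching the schema
    # (it can be empty if the model refuses, which this sketch does not handle).
    return json.loads(completion.choices[0].message.content)
```

Calling `extract_fields(contract_text, "gpt-5.2", {"summary": "..."})` would then return a dict with a single `summary` key, assuming the chosen model supports Structured Outputs.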

In this post I used the sample contract MEDIWOUNDLTD_01_15_2014-EX-10.6-SUPPLY AGREEMENT from the CUAD_v1 example contracts dataset.

For the head-to-head test I focused on the "Summary" data element – which is a prompt to extract a brief summary of the entire contract. This is an interesting field to focus on since it's the one that asks the LLM to read the entire contract but summarize it briefly. I like to use it because I can see which details various models pay attention to and how deeply the model reasons within the contract before creating the summary.

    from pydantic import BaseModel, Field


    class Contract(BaseModel):
        # Brief whole-contract summary; the description doubles as the prompt.
        summary: str = Field(
            ...,
            description=(
                "A factual, objective summary of the contract in 2-4 sentences. "
                "Include: what the agreement is about, the main obligations, and key terms. "
                "Write in third person without pronouns (they/it/this). "
                "Example: 'The agreement establishes a partnership between Company A and Company B for software development services. "
                "Company A will provide development resources while Company B provides project management. "
                "The contract runs for 2 years with a total value of $500,000.'"
            ),
        )

        # other extraction definitions follow...

Test Results

Overall, I like GPT-5.2's summary a lot. Below is the output of each model I tested, with a quick hot take on how it compares to the others.
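The comparisons below are my own read of each output. As a rough complement, the constraints stated in the field description (2-4 sentences, no they/it/this) can be checked mechanically. The sketch below is only a heuristic and not part of the test repo; `check_summary`, `compare_models`, and the `summarize` callable are hypothetical names, and the sentence splitter is naive (abbreviations such as "Ltd." will inflate the count).

```python
import re
from typing import Callable, Dict, List

# Pronouns the field description asks the model to avoid.
BANNED_PRONOUNS = {"they", "it", "this"}


def check_summary(summary: str) -> Dict[str, object]:
    """Heuristically check a summary against the prompt's stated constraints."""
    # Naive split: a sentence ends at ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", summary.strip()) if s]
    words = re.findall(r"[a-z']+", summary.lower())
    return {
        "sentences": len(sentences),
        "length_ok": 2 <= len(sentences) <= 4,
        "pronouns": sorted(set(words) & BANNED_PRONOUNS),
    }


def compare_models(
    models: List[str],
    summarize: Callable[[str, str], str],
    contract_text: str,
) -> Dict[str, Dict[str, object]]:
    """Run the same contract through each model and check each summary.

    `summarize(model, contract_text)` is supplied by the caller, for example
    a thin wrapper around whatever extraction call the test harness uses.
    """
    return {m: check_summary(summarize(m, contract_text)) for m in models}
```

A check like this says nothing about legal accuracy or depth; it only flags summaries that ignore the length and pronoun instructions, which still leaves the qualitative comparison to a human reader.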

Llama-3.1-8B-Instruct-FP8

Llama 3.1 provided a good summary – definitely great considering the size and low cost to run the model.

This response is a high-level, surface summary that correctly identifies the parties and purpose but lacks the specificity, structure, and legal nuance shown by all the larger models.

gpt-4.1

Next I tried gpt-4.1, which is still a go-to model for the teams I work with. Its summary sits in the middle ground: clearer and more legally framed than Llama-3.1, but less detailed and less contract-aware than gpt-oss-20b and the GPT-5 series.

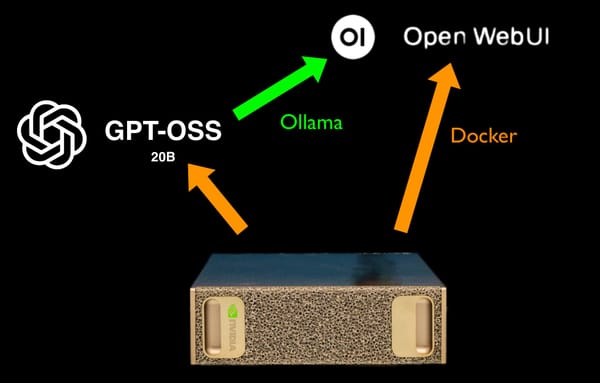

gpt-oss-20b

Next up is OpenAI's open-weight model, which also produced a nice output. Compared to Llama-3.1 and gpt-4.1, this model goes deeper into commercial and operational details (pricing, termination windows, obligations), but is less precise than GPT-5.x in legal structure and conditions precedent.

gpt-5.1

This was OpenAI's premium model until today. Its response provides a well-balanced, lawyer-style summary with strong operational and regulatory detail, clearly outperforming gpt-4.1 and gpt-oss-20b in completeness while remaining concise.

gpt-5.2

Now we finally get to the new frontier model. Compared to gpt-5.1, this version adds the most contract-specific nuance (conditions precedent, exclusivity mechanics, termination triggers), making it the most faithful and legally precise representation of the agreement overall.